Criminal networks in Southeast Asia are increasingly turning to artificial intelligence tools, including ChatGPT, to power large-scale online fraud schemes, according to new findings.

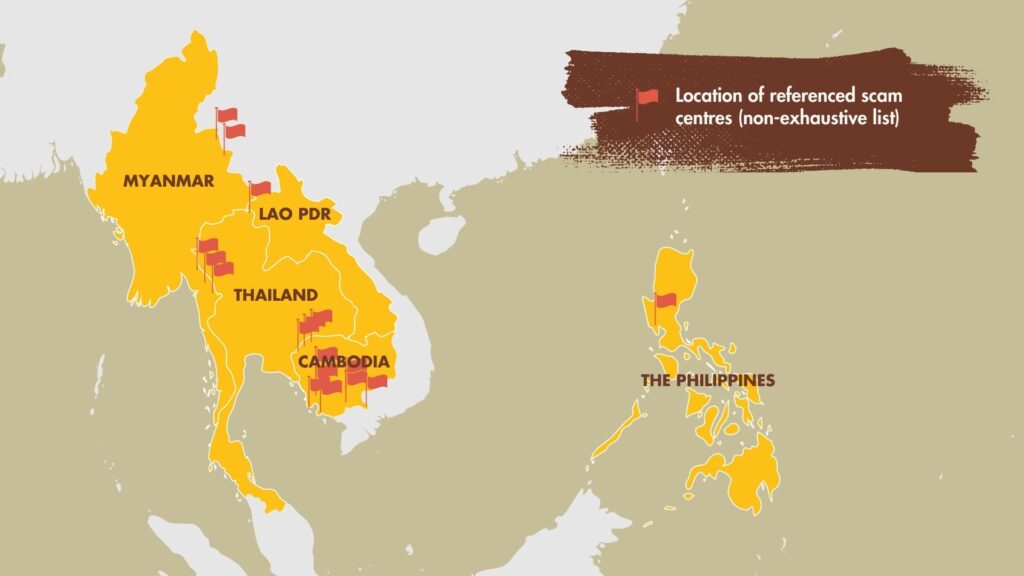

Scam compounds operating along the Myanmar–Thailand border are reported to be holding trafficked workers under harsh conditions, forcing them to run elaborate cyber scams. Many victims were lured with false promises of legitimate jobs abroad, only to be abducted and coerced into digital fraud operations.

One such victim, Duncan Okindo from Kenya, accepted what he believed was a customer service position in Thailand. Instead, he was taken to a compound known as KK Park, where workers were compelled to pose as Americans online. Using ChatGPT, they created convincing personas—such as ranchers or soybean farmers—to win the trust of unsuspecting targets before pushing them toward fraudulent cryptocurrency investments.

Supervisors issued strict scripts detailing when to raise topics like real estate, when to shift the conversation to digital assets, and how to manage multiple conversations at once. ChatGPT was exploited to provide fast, natural-sounding replies, making the deception more effective and difficult to identify.

Inside these compounds, workers faced relentless pressure to meet quotas. Failure often meant punishment, ranging from beatings to public humiliation. Survivors have described the experience as a form of modern slavery marked by physical abuse and psychological torment.

Okindo eventually managed to escape after Thai authorities disrupted operations at KK Park. Since returning home to Kenya, however, he has continued to endure stigma, threats, and financial struggles, a sign that the trauma of these scams extends far beyond the compounds themselves.

The developers of ChatGPT have stated that their systems are designed with safeguards against fraud and that they act swiftly to block misuse when detected. Yet experts caution that AI-driven deception is advancing more rapidly than the mechanisms designed to contain it.

Human rights advocates warn that the intertwining of human trafficking and cyber fraud is becoming a global crisis. They are calling for governments, technology companies, and international agencies to strengthen protections, coordinate law enforcement, and support survivors.

The rise of AI has brought countless benefits, but this case highlights its darker side: for thousands trapped in scam compounds, artificial intelligence has become both a tool of exploitation and a weapon of deceit.

White Horse Daily Analysis

The rise of AI is often celebrated for its potential to transform industries and empower people. Yet, as this case shows, innovation without accountability comes with a human cost. Thousands are trapped in compounds like KK Park, turned into unwilling agents of fraud.

The world faces a dual responsibility: protect consumers from scams and protect victims who are coerced into committing them. If governments and technology providers fail to act decisively, Southeast Asia’s scam compounds could become the blueprint for a new kind of organized crime—AI-driven, borderless, and deeply exploitative.
